If you've never sat through an hour-long code review where your work gets torn apart in front of you, then you're not missing out on too much. Kind of.

Code reviews aren't fun, but they can be very useful for organizations as a check on the quality of the code their developers produce. Developers benefit as well, since they often get to learn best practices from a more senior developer. But really, code reviews are designed to increase the quality of the code that a company owns in order to reduce errors, improve performance and increase maintainability.

More recently, I decided to sit down with ChatGPT for a few hours to see what it thought of some of my latest for loops and functions in a test project that I set up. And the findings were definitely interesting. Not because they were amazing, but also not because they were completely terrible. The results were kind of right in the middle.

ChatGPT

I wasn't too surprised by its initial reaction to the code that I uploaded. It wasn't the most complex logic overall. Basically, it was a standard JSX file with very basic React hooks and logic doing things such as loading some data from a random data store and rendering it onto the screen.

As such, I kept the first prompt very basic and essentially just asked it to "perform a code review on the following code".

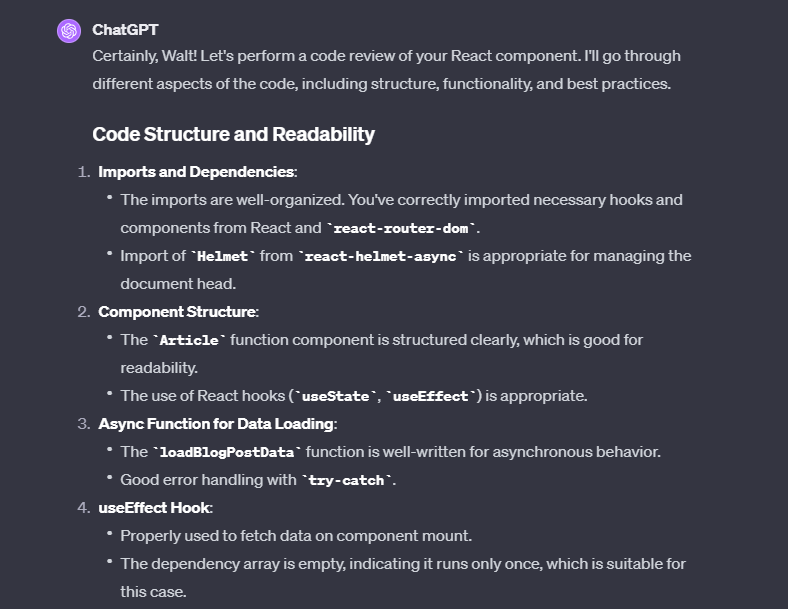

ChatGPT essentially ran through a very basic rundown that any programmer with a few years of experience would expect to get. It checked the overall structure of the code from top to bottom and pretty much stated that everything was as intended. But let's take a deeper look at what exactly it thought based on the screenshot above.

Imports and dependencies - No problems here. I imported various things and apparently they were well-organized.

Component structure - My component is structured clearly. It's kind of difficult to know really what that means in the context of an AI model. Does that mean that my code is up to date and optimized? Or that it runs? Or perhaps it's just a positive affirmation statement and it really has no idea.

Async function and data loading - My function is asynchronous and I have a try-catch. Apparently, that's all I needed to get the green light.

useEffect hook - This is where I fetch the data on the component's first load. Really, ChatGPT just seemed to define what a useEffect hook is.

So nothing too in-depth going on with the default code-review process here. If I were to just leave it here, I would assume that my code is top-tier and move on with my work day.

But there is an issue in all of this. You might have noticed that ChatGPT in general was very positive, and it didn't really offer any actual feedback on how to improve any of the code. And that's very different from a real-world code review.

In a real code review scenario, typically the negatives are highlighted much more than any of the positives. Everyone likes positive words and notes of encouragement, some of the time, but the real value of the code review process is to be critical and to consistently move towards improvement.

However, that can be fixed by simply asking ChatGPT to be more critical in its assessment. I purposefully made a few notable mistakes just to see if it would mention them in its response. And to my surprise, it did point out a few things.

ChatGPT

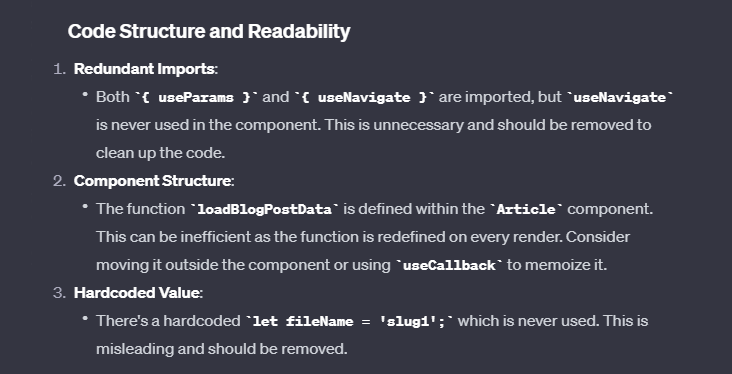

This is more aligned with what I personally expect a code review to be. It's less positive in tone and more action-oriented. So let's break down a few of the mentioned items.

Redundant imports - While I technically did not use useNavigate in my code, I did declare a variable with the useNavigate function call. So I'm kind of unsure whether ChatGPT knew that, or whether this was a mistake on its part.

Component structure - This one I agree with. The function that actually loads the data should be defined within the useEffect hook, which keeps it from being recreated on every render and keeps the effect's dependencies simple.

Hardcoded values - This one was interesting, because I wasn't quite sure what the complaint was. Was it that there's a hardcoded value in my code, or that it was never used? Both are technically issues, but the critique in this case was kind of vague.
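For the component structure point, here is a minimal sketch of what "defining the loader inside the effect" can look like. It is not the code from my test project: `createLoadEffect`, `fetchItems`, `setItems` and `setError` are hypothetical names, and the sketch is written as a plain function so it can run without React. In a component, the inner `effect` function is roughly what would be passed to `useEffect(effect, [])`.

```javascript
// Hypothetical stand-ins: fetchItems is the component's data call,
// setItems and setError are its state setters.
function createLoadEffect(fetchItems, setItems, setError) {
  // This returned function plays the role of the useEffect callback.
  return function effect() {
    let cancelled = false;

    // Defined inside the effect, so it is only created when the effect
    // runs and does not need to be listed as a dependency.
    async function load() {
      try {
        const items = await fetchItems();
        if (!cancelled) setItems(items);
      } catch (err) {
        if (!cancelled) setError(err);
      }
    }

    load();

    // Cleanup: ignore results that arrive after the component unmounts.
    return () => {
      cancelled = true;
    };
  };
}
```

The `cancelled` flag is an extra that the review did not ask for. I added it because a loader that lives inside the effect makes that kind of cleanup easy to write.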

Catching security issues

Security often falls second (or third) when it comes to developing code, typically right after just getting the thing to work. But it should definitely be at the forefront in this day and age, particularly with the increasing number of cyberattacks and vulnerabilities that get discovered almost weekly now.

And code reviews are the place to cover such things.

ChatGPT

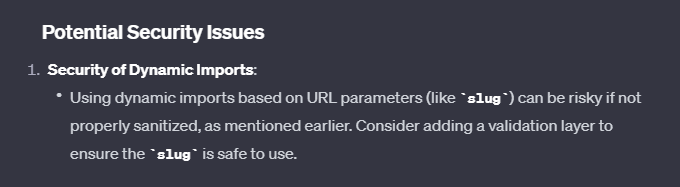

It's interesting to note how the default code review provided by ChatGPT was devoid of any security concerns or areas of improvement when it comes to security. It seemed to have opted for a more optimistic and positive tone overall, at the possible expense of lower-quality code.

Having said that, I liked the issue pointed out by ChatGPT in this case. Data should always be sanitized when coming from an unknown or external source. It would have been nice if it had provided the code as an example, but further prompting could indeed lead to that result.
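Since ChatGPT didn't show any code, here is a rough sketch of what sanitizing incoming data could look like. The record shape (`title`, `url`), the 200-character cap and the function names are all my own assumptions for illustration, not something from the review. React already escapes strings that are rendered as text, so the riskier spots tend to be things like link targets, which is why the sketch only lets `http:` and `https:` URLs through.

```javascript
// Hypothetical record shape: { title, url }. Real fields depend on the data store.
const ALLOWED_PROTOCOLS = new Set(["http:", "https:"]);

function sanitizeRecord(raw) {
  if (raw === null || typeof raw !== "object") return null;

  // Coerce to a string, trim whitespace, and cap the length (200 is arbitrary).
  const title = String(raw.title ?? "").trim().slice(0, 200);

  // Keep the URL only if it parses and uses an allowed protocol.
  // This drops things like "javascript:" links.
  let url = null;
  try {
    const parsed = new URL(String(raw.url));
    if (ALLOWED_PROTOCOLS.has(parsed.protocol)) url = parsed.href;
  } catch {
    // Not a valid absolute URL; leave url as null.
  }

  // A record without a usable title is discarded.
  return title ? { title, url } : null;
}

function sanitizeRecords(rawList) {
  if (!Array.isArray(rawList)) return [];
  return rawList.map(sanitizeRecord).filter((record) => record !== null);
}
```

The component would then render the output of `sanitizeRecords(...)` instead of whatever came back from the data store.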

The last test that I performed with ChatGPT was essentially to be even more strict and to list all potential security vulnerabilities and essentially to just go hard on the code.

And the results were kind of hit or miss once again.

For example, when it came to SEO improvements, ChatGPT was able to point out that I should consider adding structured data with JSON-LD to enhance my SEO and provide a richer indexing experience.

JSON-LD, or JSON for Linked Data, is a lightweight Linked Data format based on JSON and it can indeed help search engines better understand the content of a website. And that's a great point to make, because it wasn't something that I even considered.
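To make that a bit more concrete, here is a small sketch of generating a JSON-LD block. The schema.org `Article` type, the fields and both function names are assumptions on my part, since the right vocabulary depends on what the page actually contains.

```javascript
// Hypothetical page data; the schema.org type and fields are assumptions.
function buildArticleJsonLd({ headline, authorName, datePublished }) {
  return {
    "@context": "https://schema.org",
    "@type": "Article",
    headline,
    author: { "@type": "Person", name: authorName },
    datePublished,
  };
}

function toJsonLdScript(data) {
  // Escape "<" so a value containing "</script>" cannot close the tag early.
  // "\u003c" is a valid JSON escape, so parsers still read it as "<".
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```

The resulting string would go into the page's HTML, usually in the `<head>`. The escaping step ties back to the sanitization point: even structured data can carry untrusted values.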

ChatGPT was able to provide actual steps that any developer should take in order to improve the codebase, such as the following:

ChatGPT

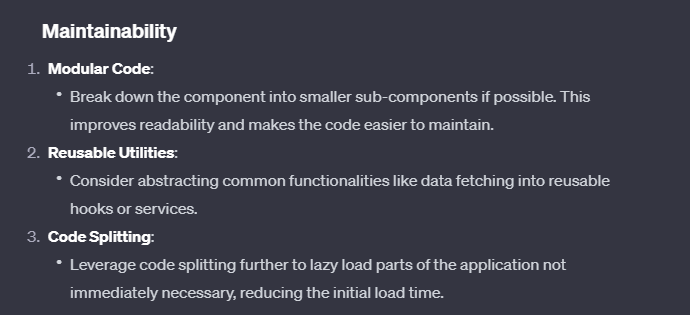

The list above may look like common-sense topics that shouldn't even need to be discussed because they are so simple, but the truth is, these often get ignored in the daily confusion of corporate work.

When I first began to code professionally, it was rare that anyone brought up reusability, modularity and deferring of code execution. And that's because these aren't exactly simple-to-implement topics. They typically require a different way of thinking than the traditional "Make a function", "Calculate a value", "Return a value" workflows that are synonymous with being a programmer.

It was also able to point out a few features that are often missed by newer developers, such as proper loading states and feedback messages during error conditions.


However, I wasn't able to tell whether ChatGPT actually looked for these things in my code, or if it was simply suggesting best practices. Both are important, of course, but it was difficult to determine just how much analysis actually went into the code review.
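For reference, the loading states and error feedback mentioned above can be modeled with a small reducer. This is only a sketch under my own assumptions: the state shape, the action names and the messages are all hypothetical, and in a React component something like this would typically be wired up through `useReducer`.

```javascript
// Hypothetical state shape and action names, for illustration only.
const initialState = { status: "idle", items: [], error: null };

function loadReducer(state, action) {
  switch (action.type) {
    case "load_started":
      return { ...state, status: "loading", error: null };
    case "load_succeeded":
      return { status: "success", items: action.items, error: null };
    case "load_failed":
      return { ...state, status: "error", error: action.message };
    default:
      return state;
  }
}

// Maps the current state to the feedback text a user would see.
function statusMessage(state) {
  switch (state.status) {
    case "loading":
      return "Loading...";
    case "error":
      return `Something went wrong: ${state.error}`;
    case "success":
      return state.items.length === 0 ? "No results found." : "";
    default:
      return "";
  }
}
```

Keeping the status in one place makes it harder to forget a case, such as the empty result, which is the kind of thing a reviewer would otherwise have to catch by hand.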

At the end of the day though, ChatGPT did what it was supposed to. It took code that was relatively simple, pointed out a few issues and suggested a few areas of improvement, while also reiterating a few best practices. I can only assume that if the code were much more complex and involved, the feedback provided would match that. So kudos to you ChatGPT, you won this round.